3 …writing R scripts

3.1 The limitations of no-code applications

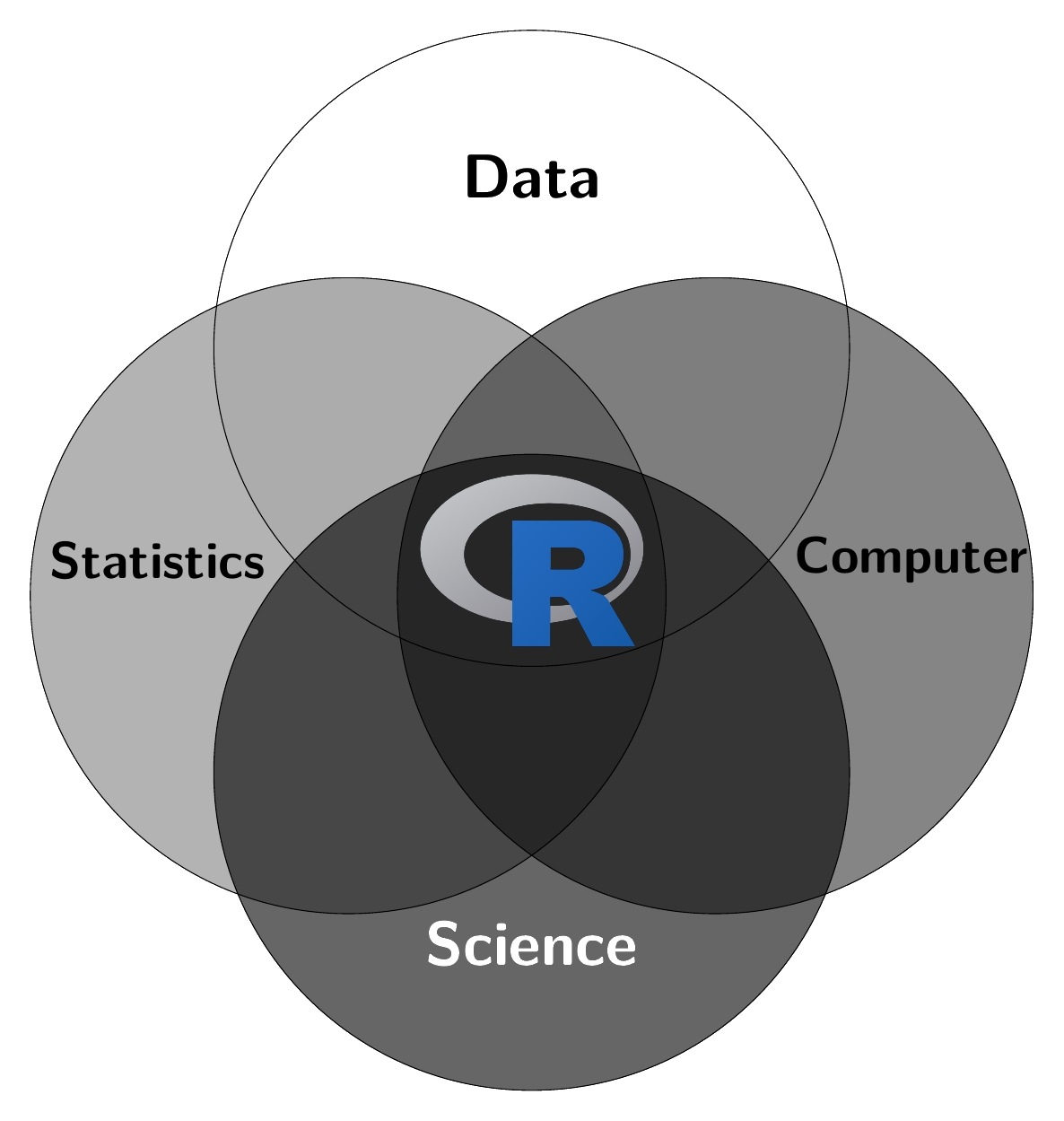

No-Code Applications (NCA) such as Microsoft Excel, RapidMiner, KNIME, DataRobot, Tableau, Microsoft Power BI, and Google AutoML are popular for good reasons. They enable the application of advanced empirical methods with little or no programming effort. Their intuitive graphical user interfaces come with pre-built templates and drag-and-drop functionality, which helps to get things done quickly without having to study the program documentation for hours. Despite their apparent ease of use and efficiency, these platforms come with several disadvantages compared to the traditional way of working with a computer by scripting and coding. Understanding these weaknesses helps to see why professional researchers, especially those actively publishing in academic journals, tend to rely on scripting languages like R and Python. These programming languages offer full control, are customizable and extendable, offer extensive opportunities for automation and reproducibility, and are often better suited for demanding data science tasks.

- NCA lack flexibility and are limited in their adaptability.

- NCA often have problems scaling with increasing data volumes or user requirements.

- NCA are often closed systems that make it difficult to integrate other systems or applications.

- NCA often bind a company to a specific ecosystem. This can lead to dependencies and vendor lock-in.

- NCA can obscure the underlying logic of how applications work, which can make troubleshooting more difficult.

- NCA abstract away the coding process, which can prevent users from understanding fundamental concepts that could be beneficial to their professional growth and their ability to tackle more complex problems.

In summary, while low-code and no-code platforms offer quick deployment and ease of use, they can lack the depth, flexibility, and control provided by traditional scripting. Researchers and businesses must consider these trade-offs, especially when planning for long-term scalability, complex customizations, or in-depth integrations.

Suppose you work with spreadsheet software like Excel. You import a CSV file using the built-in import tool and save the converted file. You notice that Excel has messed up the dates during the import, so you spend a few minutes cleaning them manually. Then you visualize the data and save the visualizations in various tabs. Maybe you spot some outliers, and you document in a footnote that these outliers are the result of some issues with the raw data. As these errors cannot be fixed, you delete the observations and variables that contain a significant number of errors. Finally, you use filters to calculate some summary statistics. All your results are written in a new tab. You’re convinced that you’ve done a great job. Then you send the file to your supervisor and ask her for her opinion.

- She probably asks you what you have done to the data. How can you efficiently and completely communicate that?

- She comes back to you some time later and asks you to do the same analysis with an updated version of the data. How can you exactly redo the analysis and how long do you think it will take you to complete the job?

- She finds an error in your work or she has an idea to improve your analysis by making a few adjustments. Can you implement the adjustments easily, or do you have to redo everything from scratch?

- She sends you back the file with the comment that she has worked out some things in the file and now everything should be fine. How do you know what she has done to the data?

To say it in the words of Stephenson (2023, sec. 5.1):

“Spreadsheets are a nightmare for quality control and reproducibility, and you should always think twice before using one. Spreadsheets will always be a handy way to manipulate tabular datasets, and you’ll probably find them useful for data collection and quick back-of-the-envelope calculations, but they’re often more trouble than they’re worth.”
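For comparison, the spreadsheet workflow described above can be sketched as a short R script in which every step is written down and can be re-run on updated data. This is only a minimal illustration: the data, the column names date and sales, and the outlier threshold of 1000 are made-up assumptions, not part of any real analysis.

```r
# Hypothetical raw data, written to a temporary CSV file so the sketch is self-contained.
raw_file <- tempfile(fileext = ".csv")
writeLines(
  c("date,sales",
    "2023-01-01,100",
    "2023-01-02,110",
    "2023-01-03,9999",
    "2023-01-04,105"),
  raw_file
)

# Step 1: import the data and convert the date column explicitly.
dat <- read.csv(raw_file, stringsAsFactors = FALSE)
dat$date <- as.Date(dat$date, format = "%Y-%m-%d")

# Step 2: drop outliers using a documented rule (the threshold is an assumption).
max_plausible_sales <- 1000
clean <- dat[dat$sales <= max_plausible_sales, ]

# Step 3: compute summary statistics on the cleaned data.
n_removed  <- nrow(dat) - nrow(clean)
mean_sales <- mean(clean$sales)

print(n_removed)
print(mean_sales)
```

Because each decision is a line of code, a supervisor can read what was done to the data, and the same script can be applied to a new version of the file by changing only the input path.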

3.2 R Scripts: Why they are useful

I have already discussed in Section 1.7 that you can run code either directly by typing your code into the console of R or by writing a script and then sending the code with Ctrl+Enter or with the Run button to the console of R. Typing functions into the console to run code may seem simple, but this interactive style has limitations:

- Typing commands one at a time can be cumbersome and time-consuming.

- It’s hard to save your work effectively.

- Going back to the beginning when you make a mistake is annoying.

- You can’t leave notes for yourself.

- Reusing and adapting analyses can be difficult.

- It’s hard to do anything except the basics.

- Sharing your work with others can be challenging.

That’s where having a transcript of all the code, which can be re-run and edited at any time, becomes useful. An R script is just plain text that is interpreted as code, or as a comment if the text follows a hash sign #. A script comes with important advantages.

Scripts…

- … provide a record of everything you did during your data analysis.

- … can easily be edited and re-run.

- … allow you to leave notes for yourself.

- … make it easy to reuse and adapt analyses.

- … allow you to do more complex analyses.

- … make it easy to share your work with others.

3.3 Create, write, and run R scripts

3.3.1 Create

- Use the menu: File > New File > R Script

- Use the keyboard shortcut: Ctrl+Shift+N (Windows/Linux) or Cmd+Shift+N (Mac)

- Type the following in the console:

file.create("hello.R")

With the first two options, a new R script window opens that can be edited and should be saved, either by clicking on the File menu and selecting Save, by clicking the disk icon, or by using the shortcut Ctrl+S (Windows/Linux) or Cmd+S (Mac). If you choose the third option, you need to open the file manually.
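The console route can be sketched as follows. To avoid touching your project files, this example creates the script in a temporary folder, which is an assumption made for illustration; in practice you would create it in your working directory. The base R function file.edit() is one way to open the file afterwards.

```r
# Build a path to hello.R inside R's temporary folder.
script_path <- file.path(tempdir(), "hello.R")

# file.create() returns TRUE if the empty file was created successfully.
created <- file.create(script_path)
print(created)

# Confirm that the file now exists on disk.
print(file.exists(script_path))

# Open the file for editing, but only when R is used interactively.
if (interactive()) {
  file.edit(script_path)
}
```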

3.3.2 Write

Regardless of your preferred way of generating a script, we can now start writing our first script:

x <- "hello world"

print(x)

Then save the script using the menus (File > Save) as hello.R.

The above lines of code do the following:

- With the assignment operator <- we create an object entitled x that stores the words “hello world”. In Section 7.1.1 the assignment operator is further explained.

- With the print() function we print the content of the object x.

3.3.3 Run

So how do we run the script? Assuming that the hello.R file has been saved to your working directory, then you can run the script using the following command:

source("hello.R")

Suppose you saved the script in a sub-folder called scripts of your working directory. Then you need to run the script using the following command:

source("./scripts/hello.R")

Note that the dot, ., means the current folder. Instead of using the source function, you can click on the Source button in RStudio.
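The following sketch puts the relative path to the test. It builds a throwaway project folder (the name my-project is hypothetical) with a scripts sub-folder, writes a small hello.R into it, and sources it. The script also contains a bare x line to illustrate a detail worth knowing: with its default settings, source() does not auto-print values the way the console does, which is why the script calls print() explicitly.

```r
# Create a temporary project folder with a "scripts" sub-folder (hypothetical layout).
project_dir <- file.path(tempdir(), "my-project")
dir.create(file.path(project_dir, "scripts"), recursive = TRUE, showWarnings = FALSE)

# Write a small script. The bare `x` line would auto-print in the console,
# but it prints nothing when the file is run with source() and default settings.
writeLines(
  c('x <- "hello world"',
    'x',
    'print(x)'),
  file.path(project_dir, "scripts", "hello.R")
)

# Make the project folder the working directory and remember the old one.
old_wd <- setwd(project_dir)

# Run the script via a path relative to the working directory
# and capture everything it prints.
printed <- capture.output(source("./scripts/hello.R"))

# Restore the previous working directory.
setwd(old_wd)

print(printed)
```

Only the explicit print(x) call produces output here. If you want source() to show the code and its results as it runs, you can call it with the argument echo = TRUE.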

With the character # you can write a comment in a script and R will simply ignore everything that follows in that line onwards.
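A small sketch of how comments behave; the object names and texts are arbitrary examples.

```r
# This entire line is a comment, so R skips it.
x <- "hello world"  # R also ignores everything after the hash sign on this line.

# A hash sign inside quotation marks is part of the text, not a comment.
y <- "item #1"

print(x)
print(nchar(y))
```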

3.4 What to do at the header of each script

At the beginning of each script, ensure that all required packages are loaded correctly. Use the pacman package, which provides the p_load() function to load and, if necessary, install packages, and the p_unload(all) function to unload all packages. Additionally, set your working directory with setwd() and clear all objects from the environment with rm(list = ls()). This ensures that everything in the environment after sourcing the script originates from the script itself. Below is the code that I use at the beginning of all my scripts. I recommend you do the same.

if (!require(pacman)) install.packages("pacman")

pacman::p_unload(all)

pacman::p_load(tidyverse, janitor)

setwd("~/your-directory/")

rm(list = ls())
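To make the idea behind "load and, if necessary, install" more concrete, here is a rough base R sketch of that logic. The helper load_or_install() is hypothetical and written only for illustration; it is not how pacman is implemented, and p_load() offers more features.

```r
# Hypothetical helper (not part of pacman): attach a package,
# installing it first if it is not yet available.
load_or_install <- function(pkg) {
  # requireNamespace() checks whether the package is installed without attaching it.
  if (!requireNamespace(pkg, quietly = TRUE)) {
    install.packages(pkg)
  }
  # character.only = TRUE tells library() that pkg holds the package name as text.
  library(pkg, character.only = TRUE)
  # Report (invisibly) whether the package is now attached.
  invisible(pkg %in% .packages())
}

# The "tools" package ships with R, so nothing is downloaded in this example.
loaded <- load_or_install("tools")
print(loaded)
```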