7 Conditional events

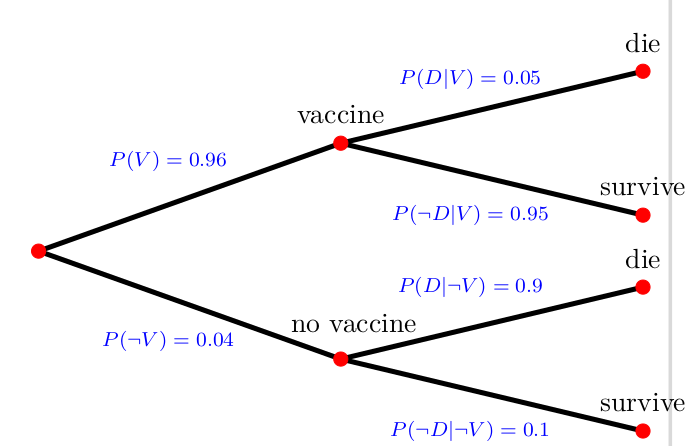

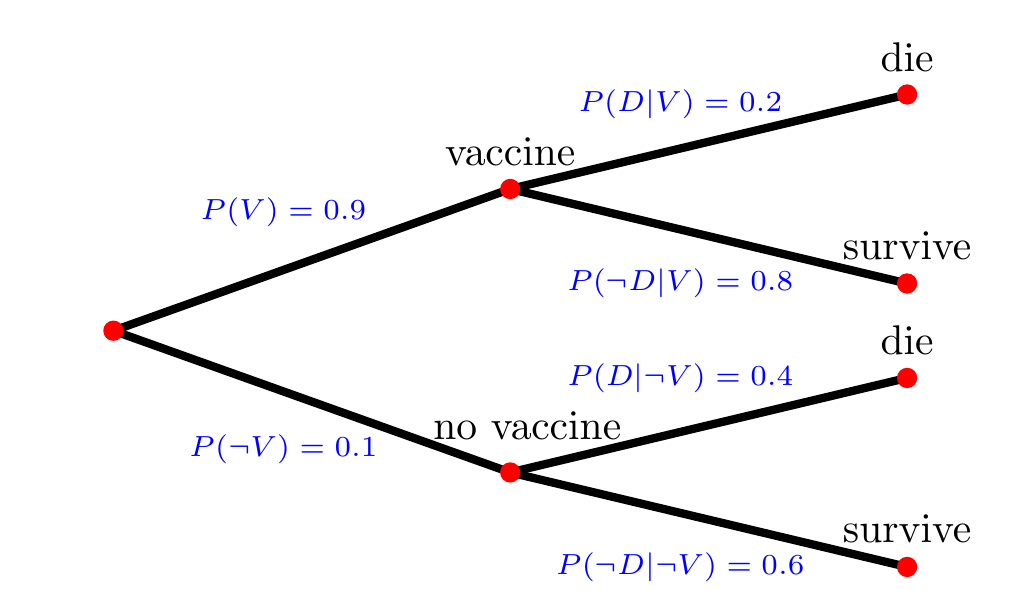

When dealing with probabilities, especially conditional probabilities, relying on intuition and gut feeling often leads to poor decision-making. Our judgments are biased, and the choices we make are usually less rational than we may believe. Exercise 7.1 provides some evidence for this claim. Before I discuss how conditional probabilities can be considered in a rational decision-making process, I review the essential basics of stochastics in Section 7.1.

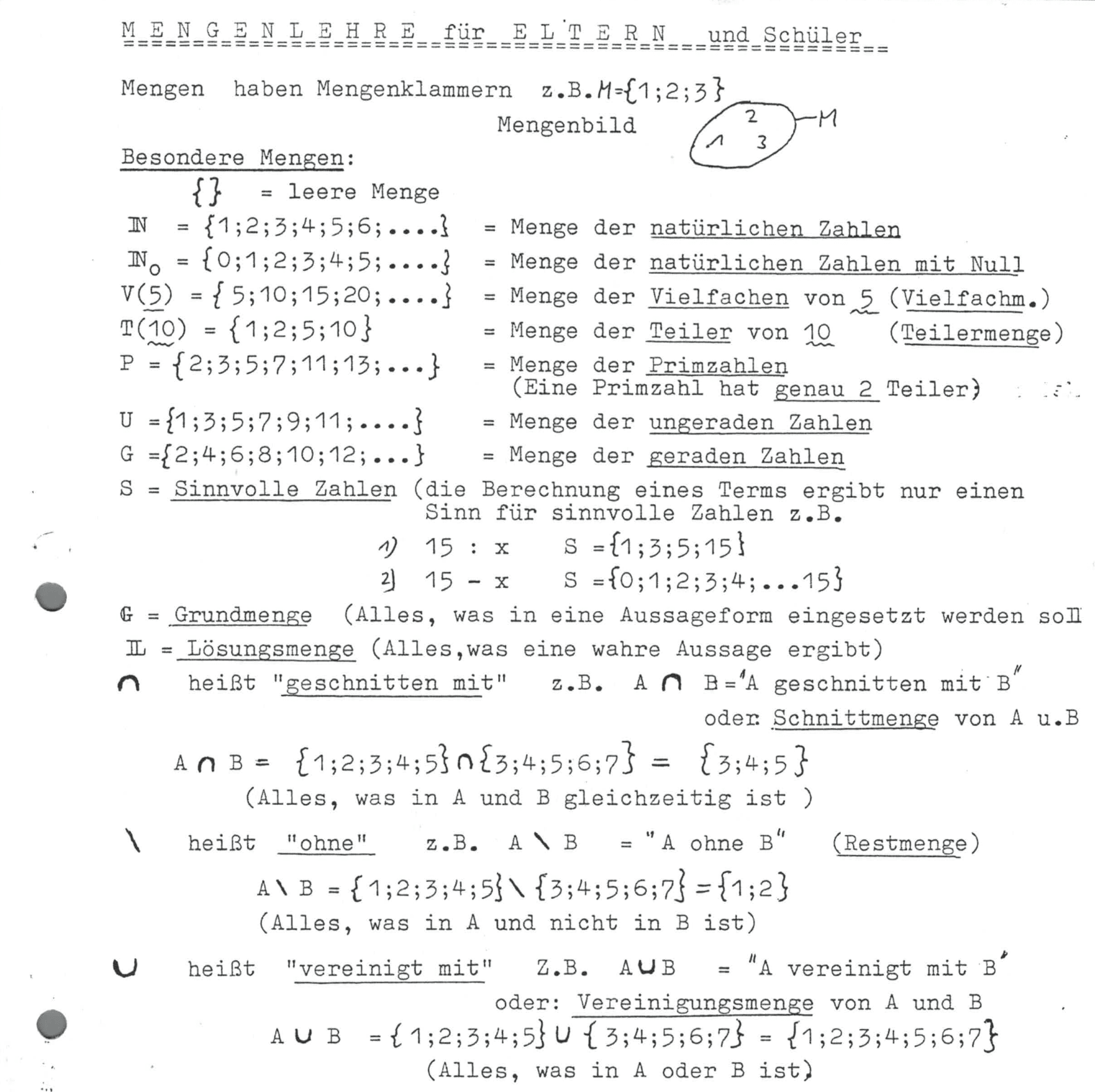

7.1 Terminology: \(P(A)\), \(P(A|B)\), \(\Omega\), \(\cap\), \(\neg\), …

7.1.1 Sample space

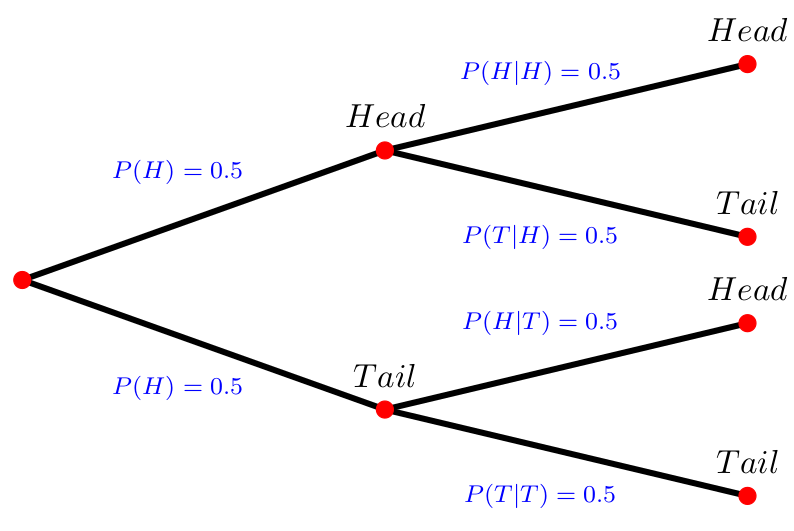

An experiment is a planned operation carried out under controlled conditions, and a result of an experiment is called an outcome. Flipping a fair coin twice is an example of an experiment. The sample space of an experiment is the set of all possible outcomes. The Greek letter \(\Omega\) is often used to denote the sample space. For example, if you flip a fair coin once, \(\Omega = \{H, T\}\), where the outcomes heads and tails are denoted by \(H\) and \(T\), respectively.

Overall, there are three ways to represent a sample space:

- to list the possible outcomes (see Exercise 7.3),

- to create a tree diagram (see Figure 7.3), or

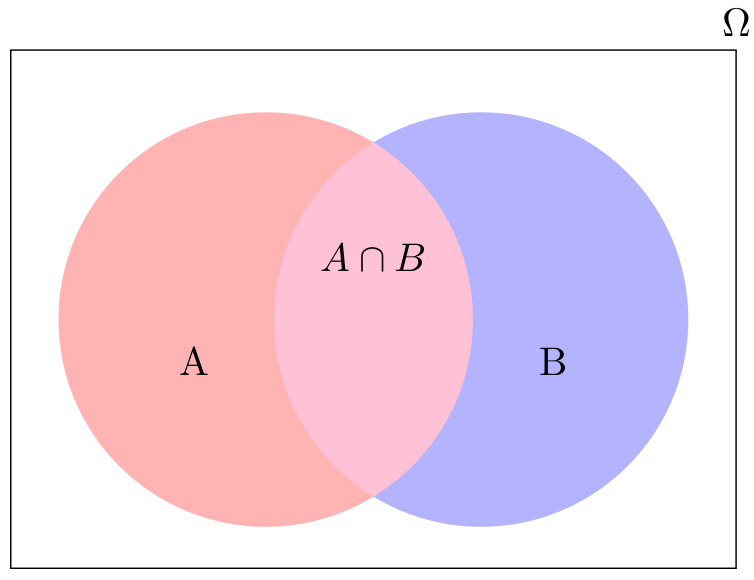

- to create a Venn diagram (see Figure 7.4).
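The first option, listing the possible outcomes, can be sketched in a few lines of Python. This is only an illustration for the experiment of flipping a coin twice; the variable names `coin` and `omega` are my own choices and are not used elsewhere in the text.

```python
from itertools import product

# The two possible results of a single coin flip.
coin = ["H", "T"]

# Sample space for flipping the coin twice: every ordered pair of results.
omega = ["".join(flips) for flips in product(coin, repeat=2)]

print(omega)       # ['HH', 'HT', 'TH', 'TT']
print(len(omega))  # 4
```

Each additional flip doubles the number of outcomes, which is why a tree diagram quickly becomes more readable than a plain list.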

7.1.2 Probability

Probability is a measure that is associated with how certain we are of outcomes of a particular experiment or activity. The probability of an event \(A\), written \(P(A)\), is defined as \[ P(A)=\frac{\text{Number of outcomes favorable to the occurrence of } A}{\text{Total number of equally likely outcomes}}=\frac{n(A)}{n(\Omega)} \]

For example, a die has six sides showing six different numbers, so the sample space of a single roll is \(\Omega=\{1,2,3,4,5,6\}\). Thus, the probability of rolling a 6 is \(1/6\), because one of the six equally likely outcomes is favorable.
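The counting definition \(n(A)/n(\Omega)\) translates directly into code. The following is a minimal sketch that assumes all outcomes are equally likely; the helper name `probability` is hypothetical and only serves this illustration.

```python
from fractions import Fraction


def probability(event, omega):
    """Classical probability n(A) / n(Omega), assuming equally likely outcomes."""
    # Only outcomes that actually belong to the sample space count as favorable.
    favorable = set(event) & set(omega)
    return Fraction(len(favorable), len(omega))


# Sample space of a single roll of a six-sided die.
omega = {1, 2, 3, 4, 5, 6}

p_six = probability({6}, omega)
print(p_six)  # 1/6
```

Using `Fraction` instead of floating-point numbers keeps the results exact, so they can be compared directly with the fractions in the text.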

7.1.2.1 The complement of an event (\(\neg\)-events)

The complement of event \(A\) is denoted by \(\neg A\) or sometimes with a superscript “c”, as in \(A^c\). It consists of all outcomes that are not in \(A\). Thus, it should be clear that \(P(A) + P(\neg A) = 1\). For example, let the sample space be \[\Omega = \{1, 2, 3, 4, 5, 6\}\] and let \[A = \{1, 2, 3, 4\}.\] Then, \[\neg A = \{5, 6\};\] \[P(A) = \frac{4}{6};\] \[P(\neg A) = \frac{2}{6};\] and \[P(A) + P(\neg A) = \frac{4}{6}+\frac{2}{6} = 1.\]
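The complement example can be reproduced with Python sets, where the set difference plays the role of \(\neg\). This is a small sketch under the assumption of equally likely outcomes; the variable names are illustrative.

```python
from fractions import Fraction

omega = {1, 2, 3, 4, 5, 6}
A = {1, 2, 3, 4}

# The complement contains every outcome of the sample space that is not in A.
not_A = omega - A

p_A = Fraction(len(A), len(omega))
p_not_A = Fraction(len(not_A), len(omega))

print(sorted(not_A))  # [5, 6]
print(p_A + p_not_A)  # 1
```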

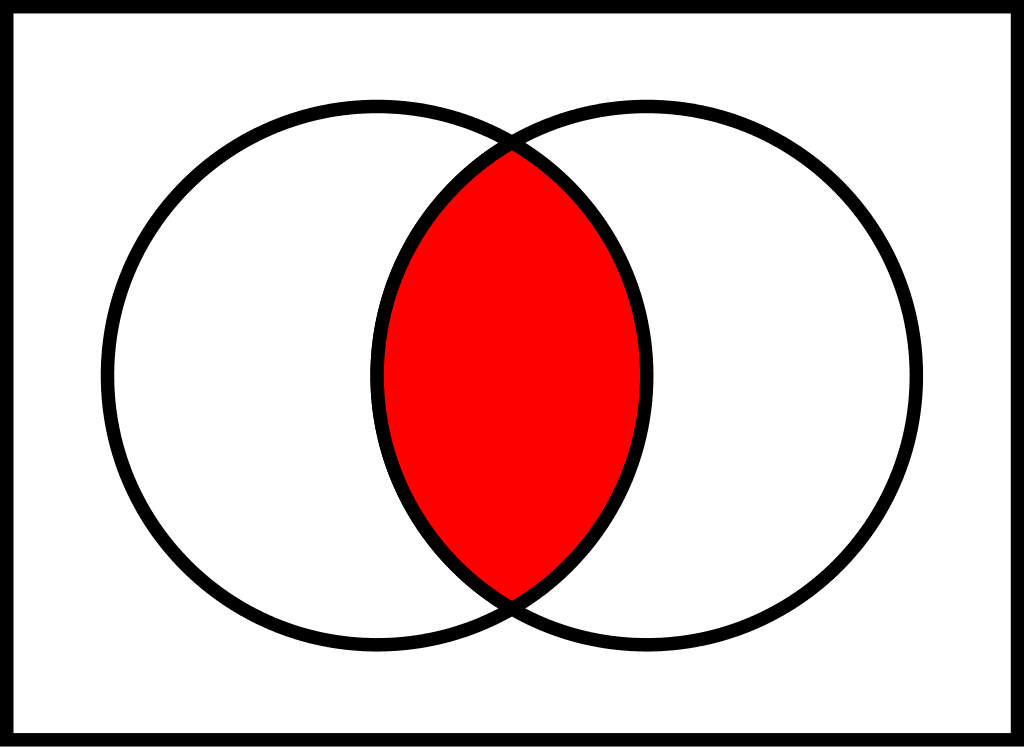

7.1.2.2 Independent events (AND-events)

Two events are independent when the outcome of the first event does not influence the outcome of the second event. For example, if you throw a die and flip a coin, the number on the die does not affect the result you get on the coin. More formally, two events are independent if the following are true: \[\begin{align*} P(A|B) &= P(A)\\ P(B|A) &= P(B)\\ P(A \cap B) &= P(A)P(B) \end{align*}\]

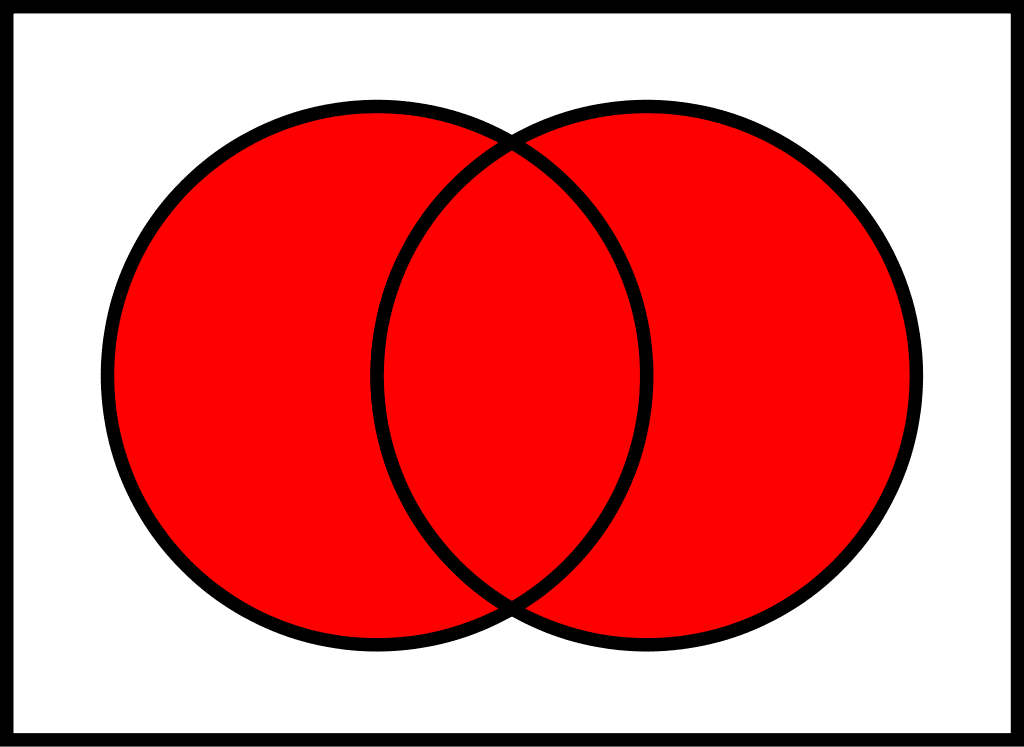

To calculate the probability that two independent events \(X\) and \(Y\) both happen, the probability of the first event, \(P(X)\), has to be multiplied by the probability of the second event, \(P(Y)\): \[ P(X \text{ and } Y)=P(X \cap Y)=P(X)\cdot P(Y),\] where \(\cap\) stands for “and”.

For example, let \(A\) and \(B\) be \(\{1, 2, 3, 4, 5\}\) and \(\{4, 5, 6, 7, 8\}\), respectively. Then \(A \cap B = \{4, 5\}\).
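The product rule for independent events can be checked by enumeration. The sketch below assumes a fair die and a fair coin thrown together, so that all twelve combined outcomes are equally likely; the events `six` and `heads` and the helper `p` are hypothetical names chosen for this illustration.

```python
from fractions import Fraction
from itertools import product

# Combined sample space: (die result, coin result), 6 * 2 = 12 outcomes.
omega = set(product(range(1, 7), "HT"))


def p(event):
    """Probability of an event, assuming all outcomes in omega are equally likely."""
    return Fraction(len(event), len(omega))


six = {o for o in omega if o[0] == 6}      # the die shows a 6
heads = {o for o in omega if o[1] == "H"}  # the coin shows heads

print(p(six & heads))     # 1/12
print(p(six) * p(heads))  # 1/12
```

Both lines give the same value, which is exactly the condition \(P(A \cap B) = P(A)P(B)\).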

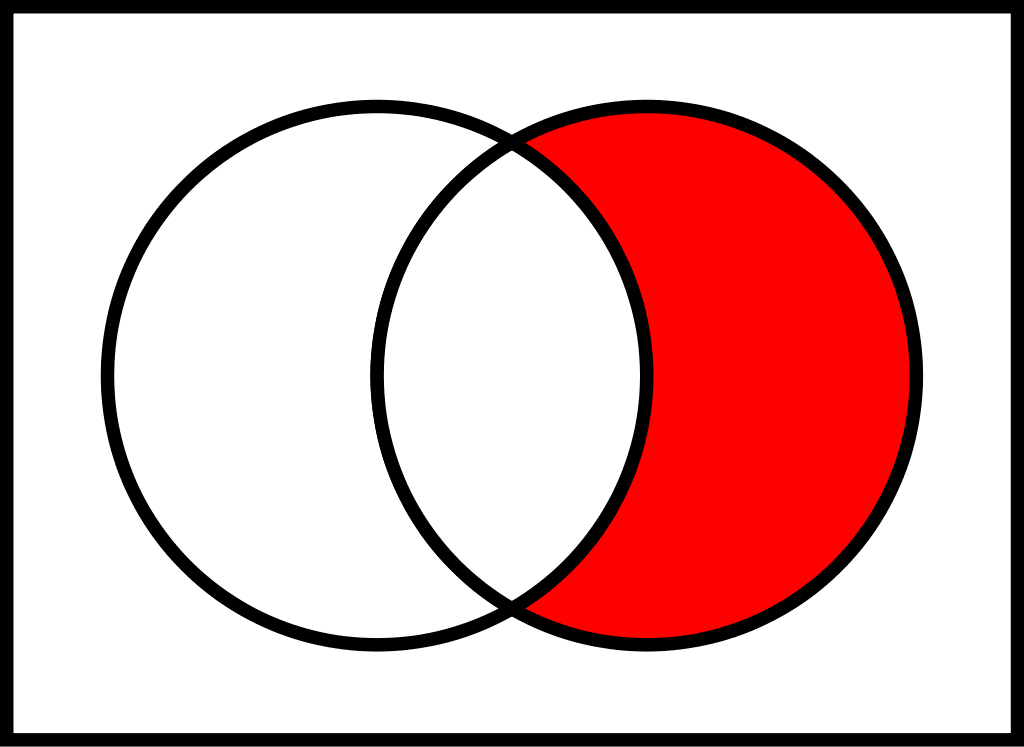

7.1.2.3 Dependent events (\(|\)-Events)

Events are dependent when one event affects the outcome of the other. If \(A\) and \(B\) are dependent events, then the probability of both occurring is the product of the probability of \(A\) and the probability of \(B\) after \(A\) has occurred: \[ P(A \cap B)=P(A)\cdot P(B|A), \] where \(|A\) stands for “after \(A\) has occurred” or “given that \(A\) has occurred”. In other words, \(P(B|A)\) is the probability of \(B\) given \(A\).

Of course, provided that \(P(A) > 0\), the equation above can also be written as \[ P(B|A)=\frac{P(A \cap B)}{P(A)}. \]

For example, suppose we toss a fair, six-sided die. The sample space is \(\Omega = \{1, 2, 3, 4, 5, 6\}\). Let \(A\) be the event that the result is 2 or 3, \(A = \{2, 3\}\), and let \(B\) be the event that the result is even, \(B = \{2, 4, 6\}\). To calculate \(P(A|B)\), we count the outcomes of \(A\) that also lie in the reduced sample space \(B\); only the outcome 2 qualifies. Then we divide that count by the number of outcomes in \(B\) (rather than in \(\Omega\)), which gives \(1/3\).

We get the same result by using the formula. Remember that \(\Omega\) has six outcomes. \[P(A\mid B)=\frac{P(B\cap A)}{P(B)} = \frac{\frac{\text{number of outcomes that are 2 or 3 AND even}}{6}}{\frac{\text{number of outcomes that are even}}{6}}=\frac{\frac{1}{6}}{\frac{3}{6}}=\frac{1}{3} \]
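Both routes to \(P(A|B)\) in the die example, counting inside the reduced sample space and applying the formula, can be compared in code. This is a minimal sketch assuming a fair die; the names `by_counting` and `by_formula` are illustrative.

```python
from fractions import Fraction

omega = {1, 2, 3, 4, 5, 6}
A = {2, 3}     # the result is 2 or 3
B = {2, 4, 6}  # the result is even


def p(event):
    """Probability of an event, assuming all outcomes in omega are equally likely."""
    return Fraction(len(event & omega), len(omega))


# Route 1: count the outcomes of A inside the reduced sample space B.
by_counting = Fraction(len(A & B), len(B))

# Route 2: apply the formula P(A|B) = P(A and B) / P(B).
by_formula = p(A & B) / p(B)

print(by_counting)  # 1/3
print(by_formula)   # 1/3
```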

7.2 Bayes’ Theorem

The conditional probability of \(A\) given \(B\) is written \(P(A|B)\). It is the probability that event \(A\) will occur given that event \(B\) has already occurred. Conditioning reduces the sample space: we calculate the probability of \(A\) from the reduced sample space \(B\). The formula to calculate \(P(A|B)\) is \[ P(A|B) = \frac{P\left(A\cap B\right)}{P\left(B\right)},\] where \(P(B)\) is greater than zero. This definition leads directly to Bayes’ Theorem, a simple mathematical formula for calculating conditional probabilities, which states that \[ P(A)P(B|A)=P(B)P(A|B). \] This is true because \(P(A \cap B)=P(B \cap A)\), so both sides equal the probability that \(A\) and \(B\) occur together. Since \(P(A\cap B)=P(B\mid A)P(A)\), we can write Bayes’ Theorem as \[P(A\mid B)={\frac {P(B\mid A)P(A)}{P(B)}}.\] The box below summarizes the important facts w.r.t. Bayes’ Theorem.
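As a quick numerical check, Bayes’ Theorem can be applied to the die example from Section 7.1.2.3, where \(A = \{2, 3\}\) and \(B = \{2, 4, 6\}\). The function name `bayes` and its parameter names are hypothetical and chosen only for this sketch.

```python
from fractions import Fraction


def bayes(p_b_given_a, p_a, p_b):
    """Return P(A|B) = P(B|A) * P(A) / P(B); requires P(B) > 0."""
    if p_b == 0:
        raise ValueError("P(B) must be greater than zero")
    return p_b_given_a * p_a / p_b


# Fair six-sided die with A = {2, 3} and B = {2, 4, 6}.
p_a = Fraction(2, 6)          # two of six outcomes are 2 or 3
p_b = Fraction(3, 6)          # three of six outcomes are even
p_b_given_a = Fraction(1, 2)  # within A = {2, 3}, only the 2 is even

p_a_given_b = bayes(p_b_given_a, p_a, p_b)
print(p_a_given_b)  # 1/3
```

The result agrees with the value \(1/3\) obtained earlier by counting, and both sides of \(P(A)P(B|A)=P(B)P(A|B)\) equal \(1/6\) here.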

For a deeper understanding of Bayes’ Theorem, I recommend watching the following videos:

Moreover, this interactive tool can be helpful.